Layers Consume More Than The Available Size Of 262144000 Bytes

Tried getting pandas from e2 now but getting the message "Layers consume more than the available size of 262144000 bytes (Service: […]". This is the second time I ran into this kind of issue. Turns out AWS Lambda has a hard limit on deployment package size: the maximum size for a .zip deployment package is 250 MB (unzipped). That is where 262144000 bytes comes from (250 × 1024 × 1024), so the limit is 250 MB, not 256 MB. We can see this in the Lambda documentation under "Deployment package (.zip file archive)". Note that this limit applies to the […]. As the wording of the message shows, the layers attached to a function count toward it. The "layers" here are Lambda layers; the error has nothing to do with the number of layers in a neural network model. Out of the 154 MiB that our […]. An issue for us here is how to reduce the layer size while keeping all our crucial dependencies.
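To make the arithmetic concrete, here is a minimal sketch that adds up the uncompressed size of one or more .zip archives and compares the total with the 262144000-byte figure from the error message. The function names `unzipped_size` and `fits_lambda_limit` are hypothetical helpers chosen for illustration, not part of any AWS tooling, and the strict "smaller than" comparison is an assumption based on the wording of the related "Unzipped size must be smaller than 262144000 bytes" error.

```python
import zipfile

# 250 * 1024 * 1024 bytes, the figure quoted in the error message.
LAMBDA_UNZIPPED_LIMIT = 250 * 1024 * 1024


def unzipped_size(zip_source) -> int:
    """Return the total uncompressed size, in bytes, of all entries in a zip.

    zip_source may be a path or a binary file-like object.
    """
    with zipfile.ZipFile(zip_source) as archive:
        return sum(info.file_size for info in archive.infolist())


def fits_lambda_limit(zip_sources) -> bool:
    """Check whether the archives together stay under the unzipped limit.

    Assumption: the function package and every layer archive are passed in,
    and the total must be strictly smaller than the limit.
    """
    total = sum(unzipped_size(source) for source in zip_sources)
    return total < LAMBDA_UNZIPPED_LIMIT
```

Running `fits_lambda_limit(["function.zip", "pandas-layer.zip"])` (both file names are placeholders) before uploading gives an early warning locally, instead of a failed deployment.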


Network Layer Diagram

Layers consume more than the available size of 262144000 bytes (service:. This is the second time i ran into this kind of issue. We can see this here in our documentation under deployment package (.zip file archive). Out of the 154 mib that our. Note that this limit applies to the.

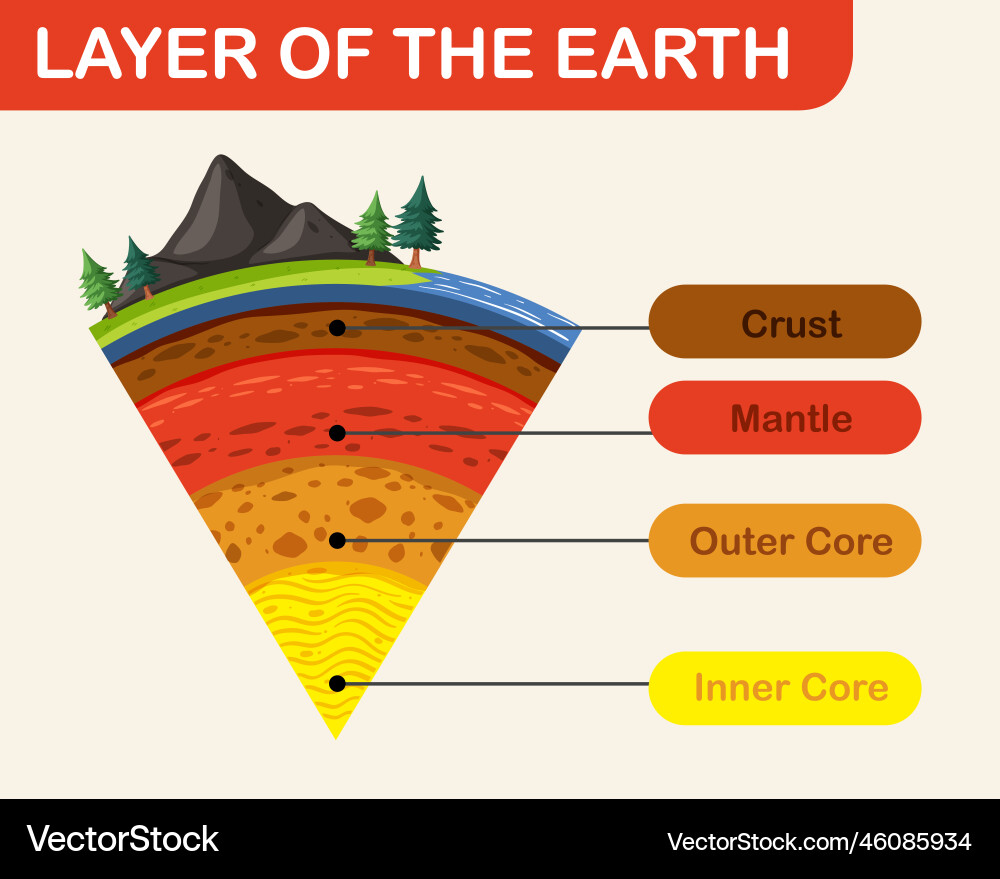

Diagram showing layers of the earth lithosphere Vector Image

Note that this limit applies to the. We can see this here in our documentation under deployment package (.zip file archive). Lambda has a 250mb deployment package hard limit. Layers consume more than the available size of 262144000 bytes (service:. Out of the 154 mib that our.

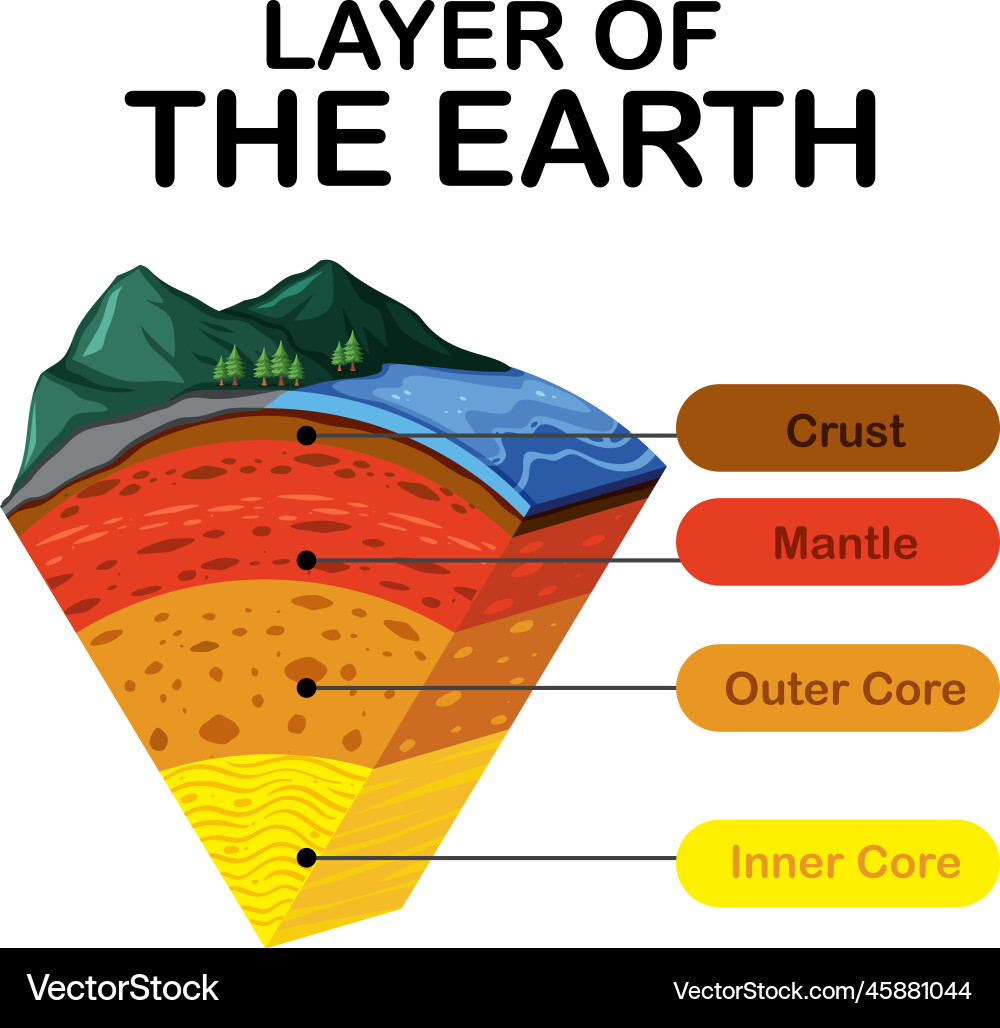

Earth Layers Basic Structure Diagram Printable

Layers consume more than the available size of 262144000 bytes (service:. Lambda has a 250mb deployment package hard limit. Out of the 154 mib that our. Note that this limit applies to the. An issue for us here is how to reduce the layer size while keeping all our crucial dependencies.

Nutrient Levels for LOHMANN BROWN LITE Layers in Phase 1

Layers consume more than the available size of 262144000 bytes when you have too many layers in your neural network model. We can see this here in our documentation under deployment package (.zip file archive). Lambda has a 250mb deployment package hard limit. Out of the 154 mib that our. Layers consume more than the available size of 262144000 bytes.






This is the second time I ran into this kind of issue. Lambda has a 250 MB hard limit on the unzipped deployment package (262144000 bytes, not 256 MB). An issue for us here is how to reduce the layer size while keeping all our crucial dependencies.
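One way to approach the size question is to first find out which parts of the layer take up the space. The sketch below ranks the top-level entries of an unpacked layer directory by size on disk. It is only an illustration: the function names `dir_size` and `largest_entries` are hypothetical, and it reports sizes without deciding what is safe to delete.

```python
import os


def dir_size(path: str) -> int:
    """Return the total size in bytes of all regular files below path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            # Skip symlinks so a linked file is not counted twice.
            if not os.path.islink(file_path):
                total += os.path.getsize(file_path)
    return total


def largest_entries(layer_dir: str, top: int = 10):
    """Return up to `top` (name, size_in_bytes) pairs, largest first."""
    sizes = []
    for entry in os.scandir(layer_dir):
        if entry.is_dir(follow_symlinks=False):
            size = dir_size(entry.path)
        else:
            size = entry.stat(follow_symlinks=False).st_size
        sizes.append((entry.name, size))
    return sorted(sizes, key=lambda item: item[1], reverse=True)[:top]
```

Pointing `largest_entries` at the directory where the dependencies were installed (for example a local `python/` build folder, which is an assumed layout) shows which packages dominate the total. Whether a large entry can be trimmed depends on what the function imports at runtime.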

Layers Consume More Than The Available Size Of 262144000 Bytes When Your Lambda Layers Are Too Large, Not When A Neural Network Model Has Too Many Layers.

Note that this limit applies to the […]. We can see this in the Lambda documentation under "Deployment package (.zip file archive)". The maximum size for a .zip deployment package for Lambda is 250 MB (unzipped). Tried getting pandas from e2 now but getting the message "Layers consume more than the available size of 262144000 bytes".
