Your Session Crashed After Using All Available RAM

A user asks how to fix the error "Your session crashed after using all available RAM" in Google Colab when running Python ... I am using Google Colab (GPU) to run my model, but as soon as I create an object of my model, Colab crashes. I tried lots of methods: closing tabs, restarting Colab, using high RAM, etc. But none of them worked. I haven't tried loading a smaller portion of my dataset. I finally decided to go ahead with manually iterating through my dataset, and that is now causing my runtime to crash. After analysing, I find that it ... The trick is simple and almost doubles the existing RAM of 13 GB. This can be done by entering certain code in the Google ...
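
The post mentions iterating through the dataset manually and not yet having tried loading less of it at once. One common way to keep memory bounded while iterating is to stream the data in fixed-size batches instead of reading everything up front. The sketch below is only an illustration of that idea, not the method used in the original thread: the helper names `iter_batches` and `column_sum`, the `value` column, and the in-memory sample file are all hypothetical, and it uses only the Python standard library.

```python
import csv
import io
from itertools import islice
from typing import Iterable, Iterator, List


def iter_batches(rows: Iterable, batch_size: int) -> Iterator[List]:
    """Yield lists of at most `batch_size` items without materialising `rows`."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    it = iter(rows)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def column_sum(csv_file, column: str, batch_size: int = 1000) -> float:
    """Sum one numeric column while holding only one batch of rows in memory."""
    reader = csv.DictReader(csv_file)  # reads rows lazily, one at a time
    total = 0.0
    for batch in iter_batches(reader, batch_size):
        total += sum(float(row[column]) for row in batch)
    return total


# Stand-in for a large file on disk (hypothetical data).
sample = io.StringIO("value\n1\n2\n3\n4\n5\n")
print(column_sum(sample, "value", batch_size=2))
```

With a real file, `open(path, newline="")` would take the place of the `io.StringIO` object. Peak memory then depends on `batch_size` rather than on the size of the whole dataset, assuming the per-batch work does not itself accumulate results.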

Your session crashed after using all available RAM · Issue 431

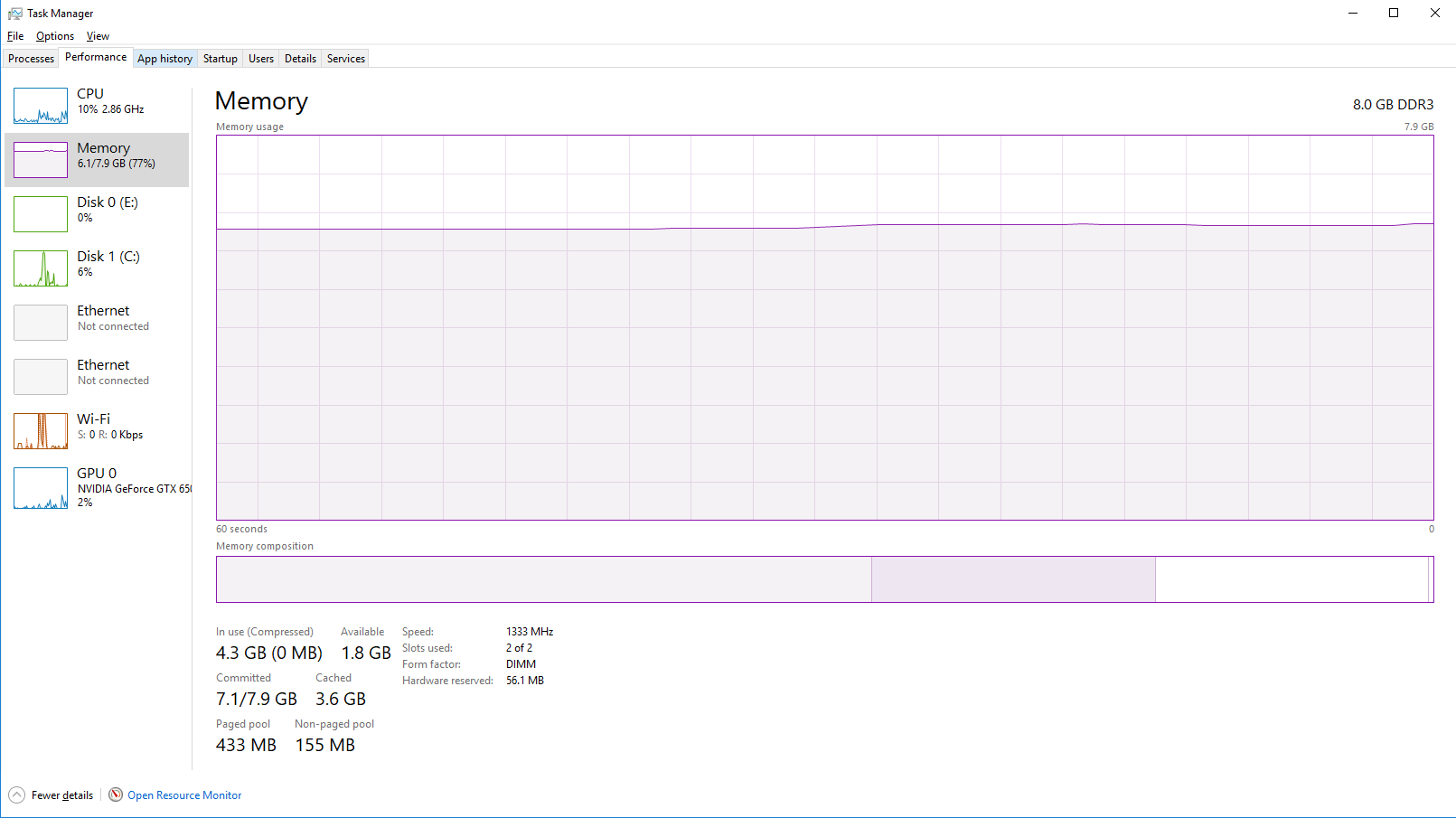

Computer Does Not Use All Available Memory and Crashes Microsoft

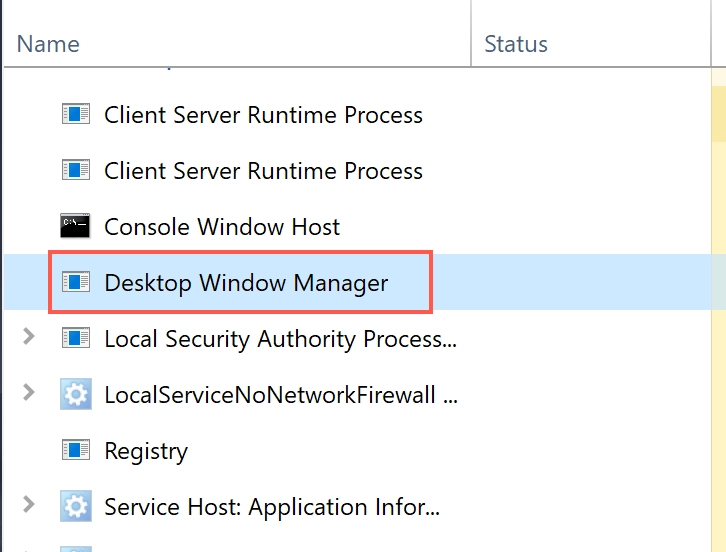

Your Session Was Logged Off Because DWM Crashed [Solved]

tensorflow Session crash for an unknown reason when using pickle.dump

Your Session Was Logged Off Because DWM Crashed How to Fix

[solved] Your session crashed after using all available RAM. Google

Pc keeps flashing black and crashing when I log in and I keep getting

'Your session crashed for an unknow reason' when run cell 'set_session

OUT OF THE RAM????? your session had crashed after using all the

I bought 100 compute units to have more RAM. Nevertheless, my session

![Your Session Was Logged Off Because DWM Crashed [Solved]](https://gridinsoft.com/blogs/wp-content/uploads/2024/02/Your-Session-Was-Logged-Off.jpg)

![[solved] Your session crashed after using all available RAM. Google](https://i.ytimg.com/vi/6-xs36h8J1U/maxresdefault.jpg?sqp=-oaymwEmCIAKENAF8quKqQMa8AEB-AHUBoAC4AOKAgwIABABGGUgUChXMA8=&rs=AOn4CLC61W2FZMbEjKzuqTPipqERxJ3fFg)